Deep Neural Networks for Image Recognition

My cat, Parker, has a superiority complex.

Readers of this blog are likely to recall that I have a cat named Parker. Parker is known for several things in our house, including his love for salmon and his work at holding down our beds. I believe that, in Parker’s cat-mind, he sees himself as Prince Lune from the Studio Ghibli movie “The Cat Returns”.

I think he may actually be a bit more like this cat from the same movie:

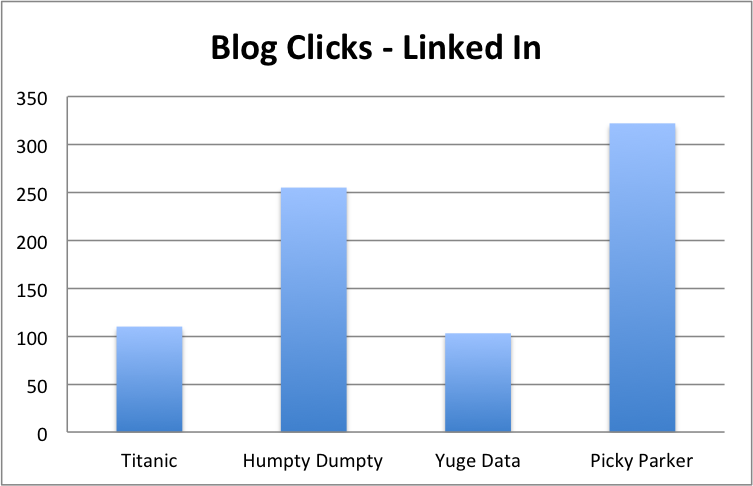

Regardless of which cat Parker most closely resembles, stats from LinkedIn show that when I mention him, my blog posts get more clicks.

BTW, check out my post on Trump’s Tweeting habits, titled “Yuge Data.” It wasn’t my most popular post, but I think it is the most interesting.

Anyway, if you like cat pictures, you’re in luck. This post has several. I fully expect my stats to skyrocket!

Introduction

A couple posts ago, I wrote about training neural networks to play video games. In that post, I started with trying to use a convolutional neural network, and then opted to go with a dense feed-forward neural network instead. In this post, I am going to return to talking about convolutional neural networks.

For an overview of different types of neural networks, I would recommend The Asimov Institute’s Neural Network Zoo. This site has an excellent list of the different kinds of neural networks, and what they are well suited for.

TensorFlow Image Recognition

TensorFlow.org provides several tutorials on CNNs (convolutional neural networks). These tutorials include one on Inception-v3. Unlike my other posts on neural nets, where I looked at training the models, this post starts with a model that has already been trained.

First, we will take a quick look at the model, and then see how it categorizes a few images.

Inception-v3 Architecture

The Inception-v3 network is a 42-layer neural network that includes several different kinds of network layers. A complete description of the network is included in this paper.

The paper also covers a number of design principles that the developers of the Inception-v3 network followed. I will not delve too deeply here, and will simply point you, good reader, to the reference above for more information about the network. You may want to break out your linear algebra and linear programming textbooks. :)

BUT WHERE ARE THE CAT PICTURES?!

(Hang on. We’ll get to them shortly.)

Classifying images. The cute panda.

The GitHub repo for TensorFlow models includes a ready-to-run Python script that can be used to classify images using Inception-v3. The code even comes with a reference to a pre-defined image that my daughter loves.

The Inception-v3 model classifies this image as follows:

giant panda, panda, panda bear, coon bear, Ailuropoda melanoleuca (score = 0.89107)

indri, indris, Indri indri, Indri brevicaudatus (score = 0.00779)

lesser panda, red panda, panda, bear cat, cat bear, Ailurus fulgens (score = 0.00296)

custard apple (score = 0.00147)

earthstar (score = 0.00117)
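Each line of output pairs a set of synonyms for one category with a score. Here is a minimal sketch of how those lines could be parsed and summed. The function name `parse_predictions` and the regular expression are my own illustration, not part of the TensorFlow script, and the "remaining" figure assumes the scores are probabilities that add up to 1 across all categories.

```python
import re

# Matches lines such as "custard apple (score = 0.00147)".
LINE_RE = re.compile(r"^(?P<labels>.+?) \(score = (?P<score>[0-9.]+)\)$")


def parse_predictions(text):
    """Return a list of (labels, score) tuples from classifier output text."""
    results = []
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            results.append((match.group("labels"), float(match.group("score"))))
    return results


# The five lines reported for the panda image in this post.
PANDA_OUTPUT = """\
giant panda, panda, panda bear, coon bear, Ailuropoda melanoleuca (score = 0.89107)
indri, indris, Indri indri, Indri brevicaudatus (score = 0.00779)
lesser panda, red panda, panda, bear cat, cat bear, Ailurus fulgens (score = 0.00296)
custard apple (score = 0.00147)
earthstar (score = 0.00117)
"""

predictions = parse_predictions(PANDA_OUTPUT)
top_label, top_score = predictions[0]
top5_mass = sum(score for _, score in predictions)

# Assumption: scores are probabilities summing to 1 over every category.
remaining_mass = 1.0 - top5_mass

print(f"top guess: {top_label.split(', ')[0]} ({top_score:.1%})")
print(f"top-5 total: {top5_mass:.5f}, left for all other categories: {remaining_mass:.5f}")
```

Under that assumption, the top guess holds most of the score, and what is left over is spread thinly across every other category the model knows about.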

From the distribution of these values, we can see that the model is pretty confident that the image is a panda. Well, of course it is. This is pretty much a slam-dunk for the network, particularly if you consider some of the features. The sharp contrast of white and black, and the clear contours leave little doubt as to what it is. But, credit where credit is due. The network pretty much nails it. Let’s continue to see how the classifier does with some of my own images.

Pen

ballpoint, ballpoint pen, ballpen, Biro (score = 0.93985)

fountain pen (score = 0.04640)

paintbrush (score = 0.00252)

rubber eraser, rubber, pencil eraser (score = 0.00156)

lipstick, lip rouge (score = 0.00156)

This was just something that I saw on my desk. The network did a pretty good job with this one. The network is pretty confident this is a ballpoint pen (not just a “pen” mind you), and it was correct.

Lego Car

racer, race car, racing car (score = 0.58064)

sports car, sport car (score = 0.18145)

car wheel (score = 0.01995)

tow truck, tow car, wrecker (score = 0.01637)

cab, hack, taxi, taxicab (score = 0.01329)

I was curious if I could confuse the network with this one. It didn’t… unless you were hoping that this would be classified as a “toy car”.

Guitar Pick

pick, plectrum, plectron (score = 0.87724)

mask (score = 0.00917)

ping-pong ball (score = 0.00602)

balloon (score = 0.00520)

carton (score = 0.00366)

I wasn’t sure how this would go, but the network seemed to do just fine. This is a guitar pick. Since I am on the theme of music, I figured I would try a few others.

Bass (6 String Ibanez)

electric guitar (score = 0.97459)

acoustic guitar (score = 0.00602)

pick, plectrum, plectron (score = 0.00213)

stage (score = 0.00116)

banjo (score = 0.00046)

Ah ha! Tricked you! This is NOT an electric guitar. It is an electric bass! A standard four-string bass is tuned an octave below the lowest four strings of an electric guitar. At first, I thought that the 6 strings (because it is a FANCY electric bass) would fool the network. As it turns out, that wasn’t it. As we will see with the next sample…

Bass (Fender)

electric guitar (score = 0.97975)

acoustic guitar (score = 0.00783)

pick, plectrum, plectron (score = 0.00214)

stage (score = 0.00059)

banjo (score = 0.00026)

Grrr… This is an electric bass! In fact, it isn’t just any electric bass, it is a Fender Jazz Bass. It is the same kind of bass that Jaco Pastorius played. (This one is different from Jaco’s in that it was manufactured years later and is black rather than “Tobacco Sunburst”.) I am disappointed that the convolutional neural network can tell the difference between a “pen” and a “ballpoint pen”, but it cannot distinguish between a guitar and a bass. Shameful. Furthermore, “banjo?!” Seriously?! Whatever. (Heavy sigh.)

Flowers

pot, flowerpot (score = 0.22127)

earthstar (score = 0.08891)

greenhouse, nursery, glasshouse (score = 0.06861)

hip, rose hip, rosehip (score = 0.06536)

strawberry (score = 0.02462)

Now, this one surprised me. This is a flower, but not in a pot. An earthstar is a fungus, not a flower. This is some kind of lily, I think. We planted it years ago, and I don’t recall the name. Please email me if you know what it is.

Another interesting thing to note on this one is that “Earthstar” came up once before. If you were paying close attention, you will recall that the CNN listed Earthstar as a possible category (with very low confidence) for the “cute panda”.

Moth

hermit crab (score = 0.52525)

necklace (score = 0.11775)

hair slide (score = 0.02804)

chain (score = 0.01688)

rock crab, Cancer irroratus (score = 0.01479)

Er, what?! This surprised me. It is not even close to a hermit crab. This is a huge moth that our family saw on our recent trip to the Key West Butterfly and Nature Conservatory. We can see, however, that the network’s confidence is not terribly high. You may have noticed that the same lack of confidence also happened with the previous image.
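One way to make "not terribly high" a bit more concrete is to compare the top score against a cutoff, and to look at the gap between the first and second guesses. The sketch below uses the top two scores from four of the images in this post. The 0.6 cutoff and the helper name `is_uncertain` are hypothetical choices of mine for illustration, not anything the TensorFlow script provides.

```python
# Top two scores reported in this post for four of the images.
TOP_TWO = {
    "panda": (0.89107, 0.00779),
    "pen": (0.93985, 0.04640),
    "flowers": (0.22127, 0.08891),
    "moth": (0.52525, 0.11775),
}

CONFIDENCE_THRESHOLD = 0.6  # hypothetical cutoff, chosen only for illustration


def is_uncertain(top_score, threshold=CONFIDENCE_THRESHOLD):
    """Flag a prediction whose best score falls below the threshold."""
    return top_score < threshold


for name, (first, second) in TOP_TWO.items():
    margin = first - second  # gap between the best and second-best guess
    flag = "check by hand" if is_uncertain(first) else "looks confident"
    print(f"{name:8s} top={first:.3f} margin={margin:.3f} -> {flag}")

# Names of the images whose top score falls below the cutoff.
uncertain = [name for name, (first, _) in TOP_TWO.items() if is_uncertain(first)]
```

A check like this only catches the cases where the network hedges. It would not have caught the basses, which were labeled "electric guitar" with a score above 0.97.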

Gopher?

fox squirrel, eastern fox squirrel, Sciurus niger (score = 0.40793)

marmot (score = 0.24043)

cliff, drop, drop-off (score = 0.22557)

wombat (score = 0.01557)

koala, koala bear, kangaroo bear, native bear, Phascolarctos cinereus (score = 0.00727)

Our family came across this little guy on a recent trip to western North Dakota. The model fairly accurately identifies this creature as a kind of squirrel. It turns out that it is actually a thirteen-lined ground squirrel.

Some North Dakota folk refer to these creatures as “Gophers”. In Minnesota, where I live, we commonly think of Gophers as either the mascot of The University of Minnesota or as nasty creatures hell-bent on destruction, and not as cuddly little guys.

beaver (score = 0.88776)

porcupine, hedgehog (score = 0.01643)

marmot (score = 0.01089)

guinea pig, Cavia cobaya (score = 0.00342)

otter (score = 0.00255)

Don’t let those teeth fool you like they probably did the CNN. The image above is a real gopher!

So, if the above picture is a real gopher, what does the CNN think the U of M’s mascot is?

teddy, teddy bear (score = 0.75890)

toyshop (score = 0.01518)

nipple (score = 0.00805)

brown bear, bruin, Ursus arctos (score = 0.00512)

comic book (score = 0.00422)

Hmmm. Maybe we should just push on.

Zoe

tabby, tabby cat (score = 0.87688)

tiger cat (score = 0.08732)

Egyptian cat (score = 0.00134)

Persian cat (score = 0.00050)

lynx, catamount (score = 0.00032)

Now we’re getting to the good stuff. The above picture is Parker’s lovely sister, Zoe. The model is pretty sure that she is a cat, which she is. Just ask her. Zoe IS a princess!

His Royal Highness, Parker

quilt, comforter, comfort, puff (score = 0.53268)

cougar, puma, catamount, mountain lion, painter, panther, Felis concolor (score = 0.07981)

lion, king of beasts, Panthera leo (score = 0.05694)

Egyptian cat (score = 0.02617)

tabby, tabby cat (score = 0.01700)

Ohhh PLEASE!!! I get the quilt or comforter thing… but cougar, puma, catamount, mountain lion?! Please don’t tell Parker, or he will start to think he is really the King of the Family!!!

Conclusion

As we have seen here, convolutional neural networks are one option for categorizing images. TensorFlow.org provides tutorials on convolutional neural networks (as well as networks of other types). Many of the models are pre-trained and ready to be used to analyze images.

I hope you have enjoyed this latest installment. Check back soon for more posts on topics in data science and machine learning!